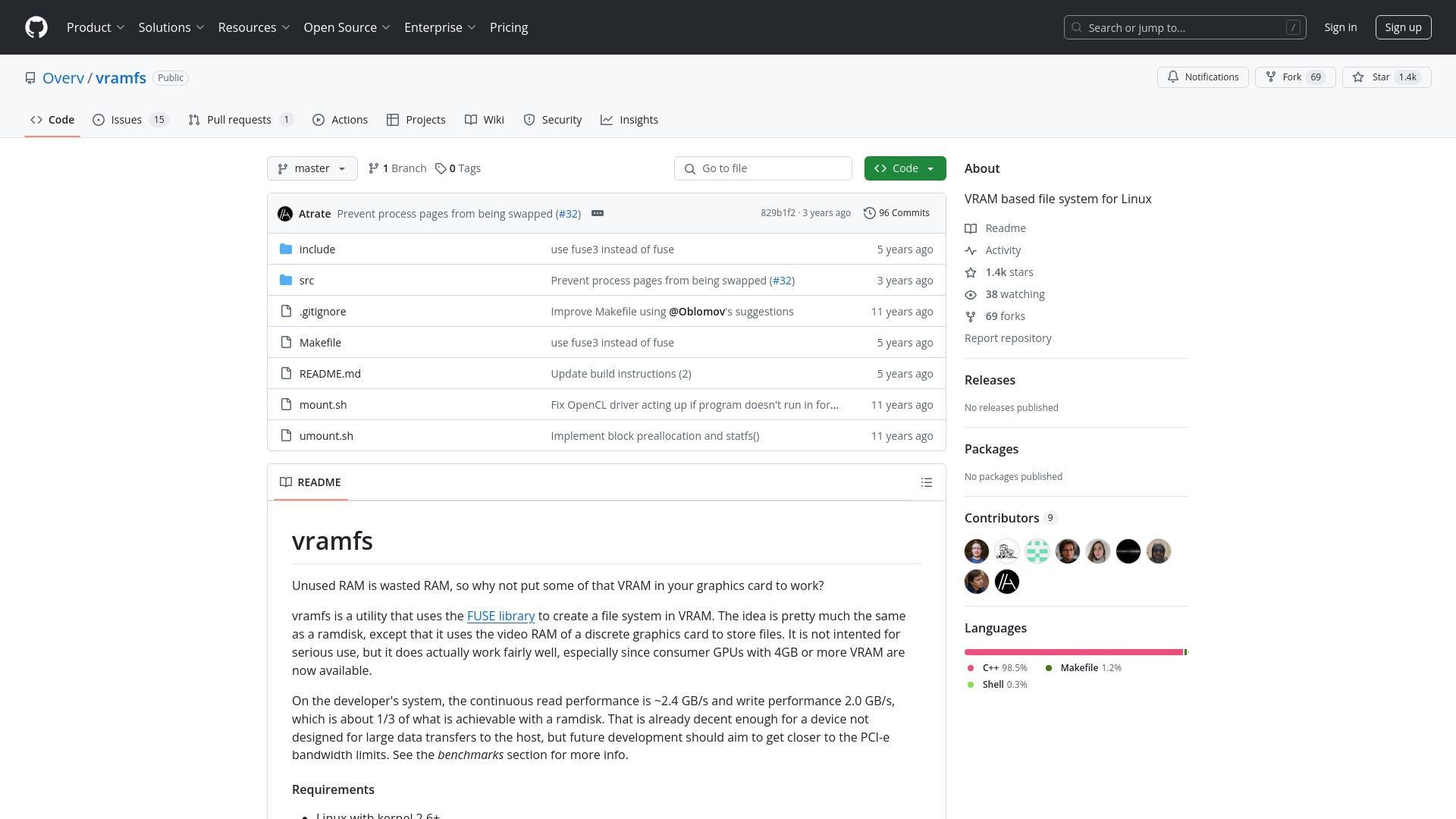

In the world of computing, unused resources represent missed opportunities. This philosophy has led to the development of tools like vramfs, a utility that transforms unused video RAM (VRAM) from graphics cards into functional file storage using the FUSE (Filesystem in Userspace) library. While the project itself isn't new, it continues to spark interesting discussions about alternative storage solutions and the creative repurposing of hardware components.

Performance Limitations and Alternatives

The current implementation of vramfs achieves read speeds of approximately 2.4 GB/s and write speeds of 2.0 GB/s, which some community members point out is comparable to modern NVMe SSDs rather than being significantly faster. These benchmarks were obtained on relatively dated hardware (Intel Core i5-2500K with an AMD R9 290 GPU), leading to speculation that performance could be substantially better on modern systems with PCIe 4.0/5.0 and newer FUSE implementations.
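Anyone wanting to check such figures on their own hardware could start from a rough sequential-throughput measurement like the sketch below. It is a minimal illustration, not the benchmark the vramfs author used: the function name `measure_throughput`, the scratch file name, and the default sizes are assumptions made for this example, and on an ordinary disk the read pass may be served from the page cache, which inflates the result.

```python
import os
import time


def measure_throughput(directory, total_bytes=64 * 1024 * 1024, block_size=128 * 1024):
    """Return (write_gb_per_s, read_gb_per_s) for one sequential pass.

    `directory` is any writable path, for example a (hypothetical)
    vramfs mount point. The scratch file is deleted afterwards.
    """
    path = os.path.join(directory, "throughput_test.bin")  # hypothetical name
    block = os.urandom(block_size)
    blocks = total_bytes // block_size

    # Sequential write pass, unbuffered so each write() reaches the filesystem.
    start = time.perf_counter()
    with open(path, "wb", buffering=0) as f:
        for _ in range(blocks):
            f.write(block)
        try:
            os.fsync(f.fileno())
        except OSError:
            pass  # some filesystems may not support fsync
    write_seconds = time.perf_counter() - start

    # Sequential read pass over the same file.
    read_bytes = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(block_size)
            if not chunk:
                break
            read_bytes += len(chunk)
    read_seconds = time.perf_counter() - start

    os.remove(path)
    written = blocks * block_size
    return written / write_seconds / 1e9, read_bytes / read_seconds / 1e9
```

Calling `measure_throughput("/path/to/mount")` on the mount point and then on an NVMe-backed directory gives a like-for-like comparison, although a single pass is noisy and should be repeated.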

Several commenters have suggested that the FUSE-based approach introduces unnecessary overhead. One proposed alternative is the phram kernel module, which maps a physical memory range as an MTD device that can then be exposed as a block device, bypassing FUSE entirely. Others have suggested that a proper Linux kernel module built on the DRM (Direct Rendering Manager) subsystem would perform better, offering proper caching, direct mmap support, and a reliable, concurrent filesystem.

Test System Specifications (from original vramfs benchmarks)

- OS: Ubuntu 14.04.01 LTS (64 bit)

- CPU: Intel Core i5-2500K @ 4.0 GHz

- RAM: 8GB DDR3-1600

- GPU: AMD R9 290 4GB (Sapphire Tri-X)

Performance Metrics

- Read performance: ~2.4 GB/s

- Write performance: ~2.0 GB/s

- Optimal block size: 128KiB (for performance) or 64KiB (for lower space overhead)
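The trade-off between the two block sizes can be illustrated with a little arithmetic. The sketch below assumes that each file occupies a whole number of fixed-size blocks, so the unused tail of the last block is wasted. That allocation model and the sample file sizes are assumptions for illustration, not details taken from the vramfs source.

```python
KIB = 1024


def allocated_bytes(file_size, block_size):
    """Bytes consumed if a file is stored in whole blocks (assumed model)."""
    blocks = -(-file_size // block_size)  # integer ceiling division
    return blocks * block_size


def slack_bytes(file_sizes, block_size):
    """Total space lost to partially filled final blocks."""
    return sum(allocated_bytes(size, block_size) - size for size in file_sizes)


# Hypothetical mix of small and medium files.
sizes = [1 * KIB, 70 * KIB, 200 * KIB]

slack_128 = slack_bytes(sizes, 128 * KIB)  # 241 KiB wasted
slack_64 = slack_bytes(sizes, 64 * KIB)    # 177 KiB wasted
```

Under this model the difference comes entirely from small files: anything under 64 KiB wastes most of a 128 KiB block, so a workload of many tiny files would favor the smaller block size.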

Implementation Limitations

- Single mutex lock for most operations (limited concurrency)

- All data transfers must cross PCIe bus

- Requires OpenCL 1.2 support

- Recommended maximum size: 50% of available VRAM

Implementation Challenges

The current vramfs implementation faces several technical hurdles. Perhaps most significantly, the project uses a single mutex lock for most operations, meaning only one thread can modify the filesystem at a time. This design choice severely limits concurrency and overall performance.
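The effect of a single lock can be seen in a toy model. The class below is a hypothetical in-memory store, not vramfs code: every read and write takes the same lock, so operations on unrelated files still run one at a time.

```python
import threading


class CoarseLockedStore:
    """Toy in-memory file store guarded by one global lock (hypothetical)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._files = {}

    def write(self, name, offset, data):
        # Every writer waits here, even when touching a different file.
        with self._lock:
            buf = self._files.setdefault(name, bytearray())
            end = offset + len(data)
            if len(buf) < end:
                buf.extend(bytes(end - len(buf)))  # zero-fill any gap
            buf[offset:end] = data
            return len(data)

    def read(self, name, offset, size):
        # Readers contend for the same lock as writers.
        with self._lock:
            buf = self._files.get(name, bytearray())
            return bytes(buf[offset:offset + size])
```

A finer-grained design might keep one lock per file, or use a reader-writer lock, so that independent operations can proceed in parallel. That is the kind of improvement the discussion has in mind.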

Another challenge is the inherent bottleneck of CPU-to-GPU data transfers. Since all reads and writes must traverse the PCIe bus and go through the CPU, the theoretical maximum speed is capped well below what direct GPU-to-VRAM access would allow. This limitation has led some to question the practical utility of the approach compared to simply adding more system RAM, which has become relatively affordable.
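To put the PCIe ceiling in numbers, the sketch below computes the theoretical one-direction bandwidth of a link from the published per-lane transfer rates and line encodings. The function name and the default x16 link width are assumptions for illustration. The source does not state which PCIe generation the benchmark system's link actually ran at, and real transfers lose further bandwidth to protocol overhead.

```python
# (transfer rate in GT/s, line-encoding efficiency) per PCIe generation
PCIE_GENERATIONS = {
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def pcie_bandwidth_gb_per_s(generation, lanes=16):
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    rate_gt, efficiency = PCIE_GENERATIONS[generation]
    return rate_gt * efficiency * lanes / 8  # bits -> bytes


def link_utilization(measured_gb_per_s, generation, lanes=16):
    """Fraction of the theoretical link bandwidth a measured rate represents."""
    return measured_gb_per_s / pcie_bandwidth_gb_per_s(generation, lanes)
```

For example, `pcie_bandwidth_gb_per_s(3)` comes to roughly 15.75 GB/s for an x16 link, so the reported 2.4 GB/s read speed would use only a small fraction of such a link. This suggests software overhead, rather than the bus alone, accounts for much of the gap.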

"Using precious VRAM to store files is a special kind of humor, especially since someone actually implemented it."

Practical Considerations and Use Cases

The community discussion reveals several practical concerns about using VRAM as a filesystem. One significant issue is power management: using VRAM for storage would prevent the GPU from entering lower power states, potentially increasing system power consumption. While some GPUs can keep selected portions of memory powered while shutting down the rest, the implementation details vary across hardware.

Another concern relates to using VRAM for swap space. While technically possible, multiple users have reported system freezes when attempting this, as GPU management processes themselves might be swapped out, leading to unrecoverable page faults. This highlights a broader challenge with swap spaces on any driver-dependent storage medium.

Despite these challenges, some niche use cases do exist. For systems with limited RAM but decent GPUs, vramfs could provide additional high-speed storage. There's also speculation about potential performance benefits for specific GPU-accelerated workloads that could operate directly on data stored in a VRAM filesystem.

For most users, however, the consensus seems to be that adding more system RAM is the more practical and cost-effective solution. As one commenter noted, 192GB of system RAM costs approximately $500 USD, while equivalent GPU VRAM would cost around $40,000 USD, which makes the choice straightforward for those simply seeking more high-speed storage.
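The commenter's figures work out as follows. This is a small worked calculation that assumes "equivalent" means the same 192GB capacity; the prices are the commenter's estimates, not verified market data.

```python
def cost_per_gb(total_usd, capacity_gb):
    """Price per gigabyte in USD."""
    return total_usd / capacity_gb


ram_usd_per_gb = cost_per_gb(500, 192)       # about 2.60 USD per GB
vram_usd_per_gb = cost_per_gb(40_000, 192)   # about 208.33 USD per GB
price_ratio = vram_usd_per_gb / ram_usd_per_gb  # VRAM is about 80x more expensive
```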

While vramfs may not revolutionize storage technology, it represents the kind of creative experimentation that drives innovation in computing. As one commenter aptly put it, projects like these embody the "don't ask why, ask why not" philosophy that continues to push the boundaries of what's possible with existing hardware.

Reference: vramfs