The development of specialized OCR (Optical Character Recognition) systems for machine learning has sparked important discussions about AI reliability, data integrity, and ethical considerations. A recently shared OCR system designed specifically for extracting structured data from complex educational materials has become the center of a nuanced community conversation about the benefits and risks of using generative AI in document processing pipelines.

|

|---|

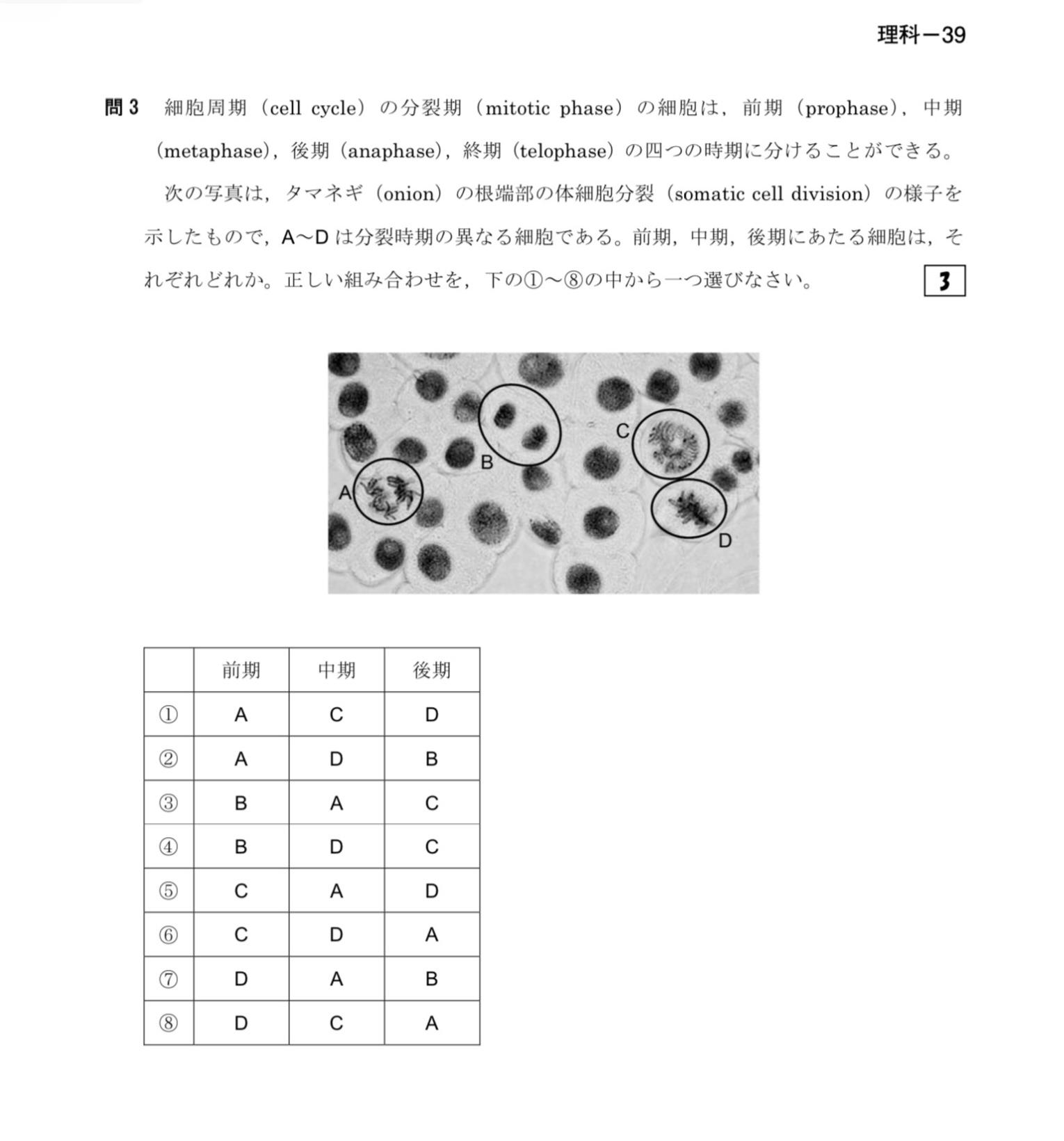

| This illustration explains the stages of mitosis in plant cells, highlighting the importance of structured data extraction in educational materials |

Hallucination Concerns with AI-Enhanced OCR

Community members have raised significant concerns about the reliability of using Large Language Models (LLMs) in OCR processes. The primary worry centers around AI hallucination - where models might not only fix genuine OCR mistakes but also inadvertently alter correct content or generate entirely fabricated information. One commenter compared this risk to the xerox bug on steroids, referencing a historical issue where scanned documents had digits unintentionally swapped, but with potentially more severe consequences when AI is involved.

The developer acknowledged these concerns, noting they've implemented a two-stage approach where traditional OCR engines handle the initial text extraction, with generative AI only applied in a second refinement stage. They also mentioned implementing simple verification checks to prevent altering correctly extracted text, though the effectiveness of these safeguards remains to be thoroughly evaluated.

Prompt Injection Vulnerabilities

Security-minded commenters highlighted prompt injection as another potential risk area. With LLMs serving as part of the processing pipeline, there's an inherent challenge in maintaining clear separation between instructions and the data being processed. This could potentially allow malicious content in documents to manipulate the system's behavior.

The developer responded that they're attempting to mitigate this risk by using JSON formatting to separate instructions from data and running the system in a sandboxed environment. However, they acknowledged this approach isn't perfect, suggesting that security concerns remain an ongoing area for improvement.

Open Source and Licensing Questions

The project's licensing structure also came under scrutiny. While initially released under an MIT license, community members pointed out potential incompatibilities with some of the incorporated components - specifically the DocLayout-YOLO model which uses the more restrictive AGPL-3.0 license. This highlights the complex licensing landscape that AI-hybrid systems must navigate, especially when combining multiple open-source components with different requirements.

The developer appeared surprised by this licensing conflict, promptly acknowledging the oversight and committing to review the license requirements more carefully - demonstrating the challenges developers face in properly managing the legal aspects of AI system development.

Language Translation and Communication Challenges

An interesting meta-discussion emerged around the developer's use of LLMs to help craft their responses to community comments. When questioned about their suspiciously polished writing style, the developer revealed they were a 19-year-old Korean student using AI assistance to communicate more clearly in English. This sparked a broader conversation about the legitimacy of using AI as a communication aid versus maintaining authentic personal expression.

Some community members defended this use case as perfectly reasonable - comparing it to using a keyboard or spellchecker to enhance communication - while others expressed concern about the increasing homogenization of online discourse through AI-mediated communication.

Future Directions for OCR in Machine Learning

Despite the concerns, many commenters recognized the value of the project's core goal: improving the quality of training data for machine learning by extracting structured information from complex documents. One commenter highlighted that organizing extracted data into a coherent, semantically meaningful structure is critical for high-quality ML training, suggesting that semantic structuring beyond basic layout analysis represents the next frontier for maximizing OCR data value in ML training pipelines.

The developer indicated plans to expand the system's capabilities in this direction, adding modules for building hierarchical representations and identifying entity relationships across document sections.

As AI continues to be integrated into document processing workflows, the community discussion around this OCR system highlights the delicate balance developers must strike between leveraging AI's capabilities and addressing legitimate concerns about data integrity, security, and ethical use. The conversation demonstrates how open sharing of AI tools can lead to valuable community feedback that ultimately improves the technology for everyone.

Reference: OCR System Optimized for Machine Learning: Figures, Diagrams, Tables, Math & Multilingual Text

|

|---|

| Structured visual data representation is essential for improving the quality of training data in machine learning |