Simon Willison recently released a new plugin called llm-hacker-news for his command-line LLM tool, designed to pull content from Hacker News discussions and feed it directly to large language models. While the technical achievement has impressed many, it has also ignited a significant debate about data privacy, consent, and the ethics of using public forum content for AI processing.

The Technical Innovation

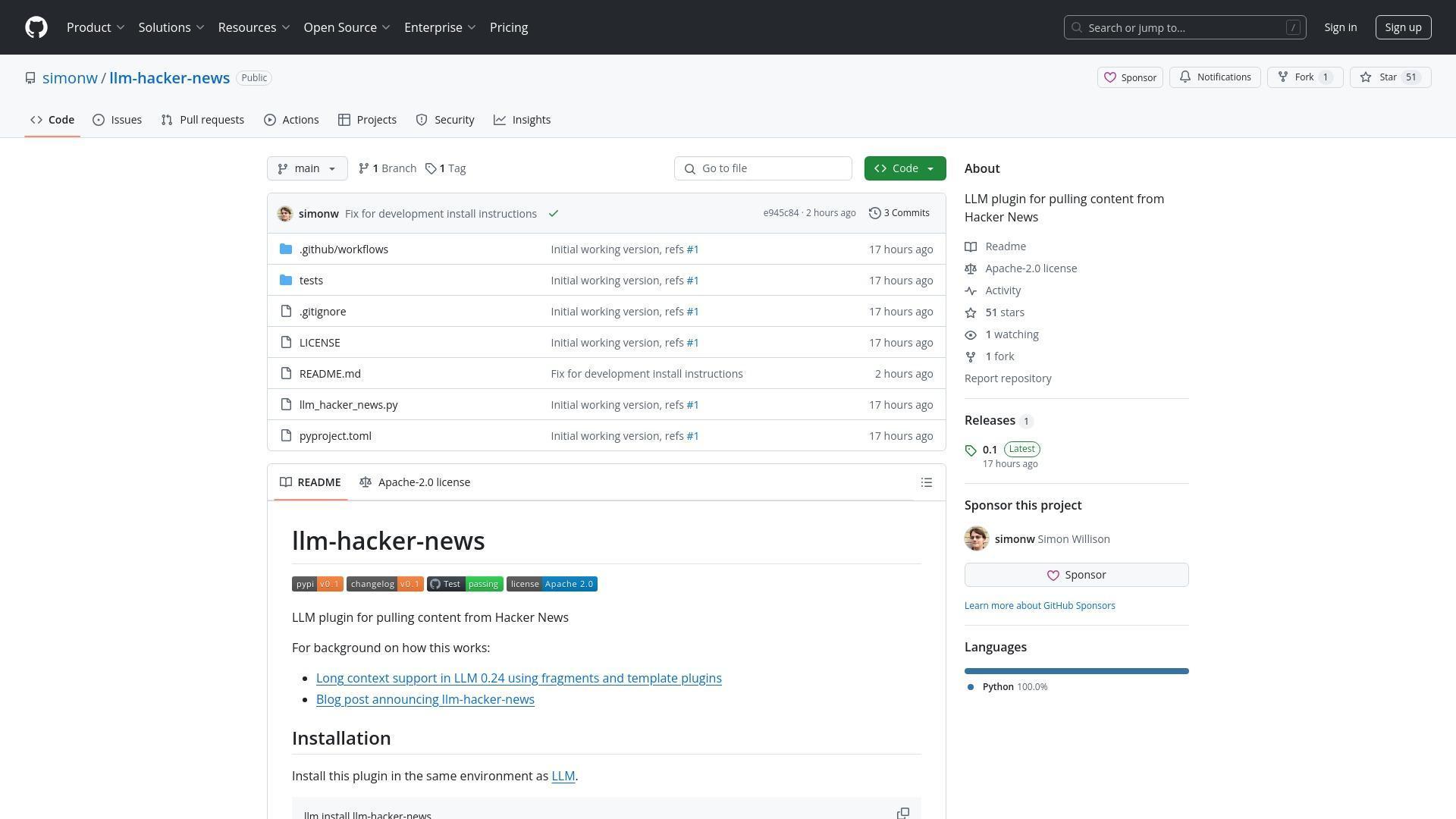

The llm-hacker-news plugin builds on a new fragments feature that Willison added to his LLM command-line tool. This feature allows users to easily feed long-context content into large language models like Gemini and Llama 4. The plugin specifically enables users to retrieve entire Hacker News discussion threads using a simple command syntax:

```bash
llm -f hn:43615912 'summary with illustrative direct quotes'
```
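
The `hn:` part of that command is a prefixed fragment reference: a short name selects a loader, and the text after the colon is passed to it. The Python sketch below illustrates that dispatch idea only. The registry, `register_loader`, `resolve_fragment`, and `fake_hn_loader` are hypothetical stand-ins, not LLM's internal code, and LLM's `-f` option also accepts plain file paths and URLs, which this sketch does not handle.

```python
from typing import Callable, Dict

# Hypothetical registry mapping a fragment prefix (e.g. "hn") to a loader
# function that returns text. Illustrative only; not LLM's implementation.
LOADERS: Dict[str, Callable[[str], str]] = {}


def register_loader(prefix: str, loader: Callable[[str], str]) -> None:
    """Associate a prefix such as 'hn' with a function that returns text."""
    LOADERS[prefix] = loader


def resolve_fragment(reference: str) -> str:
    """Turn a reference like 'hn:43615912' into text via its loader."""
    prefix, sep, argument = reference.partition(":")
    if not sep or prefix not in LOADERS:
        raise ValueError(f"no loader registered for {reference!r}")
    return LOADERS[prefix](argument)


def fake_hn_loader(item_id: str) -> str:
    # Stand-in for a real loader, which would fetch the thread over HTTP.
    if not item_id.isdigit():
        raise ValueError(f"expected a numeric item ID, got {item_id!r}")
    return f"[Hacker News thread {item_id}]"


register_loader("hn", fake_hn_loader)
print(resolve_fragment("hn:43615912"))
```

Keeping loaders behind a prefix lets new sources be added without changing the command syntax: a hypothetical `gh:` loader would slot in the same way.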

The plugin fetches the thread from the Algolia Hacker News JSON API, converts it into an LLM-friendly text format, and sends it to the user's configured default model (such as gpt-4o-mini). This removes the need to copy and paste a discussion by hand before analyzing or summarizing it.
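
To make "LLM-friendly text format" concrete, here is a minimal Python sketch that flattens an Algolia-style item tree (a story whose `children` are comments with `author` and HTML `text` fields, nested arbitrarily deep) into indented plain text. The layout and the names `clean` and `thread_to_text` are hypothetical; the plugin's actual output format may well differ.

```python
import html
import re
from typing import Any, Dict, List

TAG_RE = re.compile(r"<[^>]+>")


def clean(text: str) -> str:
    """Turn a comment's HTML body into one line of plain text."""
    text = re.sub(r"<p>", " ", text, flags=re.IGNORECASE)  # paragraph breaks -> spaces
    text = html.unescape(TAG_RE.sub("", text))  # drop remaining tags, decode entities
    return " ".join(text.split())  # collapse runs of whitespace


def thread_to_text(item: Dict[str, Any]) -> str:
    """Render a story and its nested comments as indented plain text."""
    lines: List[str] = []
    if item.get("title"):
        lines.append(f"Title: {item['title']}")
    root_text = clean(item.get("text") or "")
    if root_text:  # e.g. the body of an "Ask HN" post
        lines.append(root_text)

    def walk(node: Dict[str, Any], depth: int) -> None:
        body = clean(node.get("text") or "")
        if body:  # skip nodes with empty or missing text, but still walk their replies
            author = node.get("author") or "[unknown]"
            lines.append(f"{'  ' * depth}{author}: {body}")
        for child in node.get("children") or []:
            walk(child, depth + 1)

    for child in item.get("children") or []:
        walk(child, 0)
    return "\n".join(lines)


sample = {
    "title": "Example story",
    "text": None,
    "children": [
        {
            "author": "alice",
            "text": "Nice <i>plugin</i>.<p>It&#x27;s fast.",
            "children": [{"author": "bob", "text": "Agreed.", "children": []}],
        }
    ],
}
print(thread_to_text(sample))
```

For the made-up `sample` above this prints a title line, then `alice: Nice plugin. It's fast.`, then `  bob: Agreed.` indented one level. Indentation preserves who is replying to whom, which a model needs in order to quote commenters accurately.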

Key Features of the llm-hacker-news Plugin:

- Allows fetching entire Hacker News threads using item IDs

- Built on the new "fragments" feature in the LLM command-line tool

- Uses the Algolia Hacker News JSON API to retrieve content

- Converts HN content into LLM-friendly text format

- Works with any model available in LLM, including GPT-4o-mini, Claude, and Gemini
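
The retrieval step can be sketched with the Python standard library alone. Algolia's Hacker News API serves a story or comment, with all of its nested replies, at `https://hn.algolia.com/api/v1/items/<id>`, where the ID is the number in a `news.ycombinator.com/item?id=...` URL. The helper names `item_url` and `fetch_item` are hypothetical, and this is not the plugin's own code.

```python
import json
import re
import urllib.request
from typing import Any, Dict

# Public Algolia endpoint returning one item together with its nested children.
ALGOLIA_ITEM_URL = "https://hn.algolia.com/api/v1/items/{}"
ITEM_ID_RE = re.compile(r"[0-9]+")


def item_url(item_id: str) -> str:
    """Build the items URL, accepting only a plain numeric Hacker News ID."""
    if not ITEM_ID_RE.fullmatch(item_id):
        raise ValueError(f"expected a numeric Hacker News item ID, got {item_id!r}")
    return ALGOLIA_ITEM_URL.format(item_id)


def fetch_item(item_id: str, timeout: float = 10.0) -> Dict[str, Any]:
    """Download one item and decode its JSON body (requires network access)."""
    with urllib.request.urlopen(item_url(item_id), timeout=timeout) as response:
        return json.load(response)
```

Calling `fetch_item("43615912")` would return a dictionary whose `children` list holds the top-level comments. Validating the ID before building the URL keeps arbitrary user input out of the request path.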

Installation and Usage:

```bash
llm install llm-hacker-news
llm -f hn:43615912 'summary with illustrative direct quotes'
```

Related Community Concerns:

- Data privacy and consent for content processing

- Terms of Service compliance

- Distinction between human reading vs. algorithmic processing

- Future of content ownership in an AI-powered internet

Privacy Concerns and Consent

The release has sparked a heated discussion about whether users should be able to opt out of having their forum posts processed by LLMs. One user asked directly: "Is there a way to opt out of my conversations being piped into an LLM?" The question reflects growing concerns about content scraping and usage rights.

Another commenter framed the objection in harsher terms: "The concern here is that people aren't happy that LLM parasites are wasting their bandwidth and therefore their money on a scheme to get rich off of other people's work."

Willison responded by pointing out that copy-and-paste cannot practically be prevented, noting that even if such restrictions existed, screenshots would circumvent them. He also described the uneven landscape of LLM training policies: some providers, such as OpenAI and Anthropic, don't train on content submitted through their APIs, while Google may use data from free-tier Gemini users to improve its products.

Legal and Ethical Boundaries

Several commenters questioned whether the plugin violates Hacker News' terms of service, which prohibit scraping and data gathering. One user quoted the relevant clause from Y Combinator's terms: "Except as expressly authorized by Y Combinator, you agree not to modify, copy, frame, scrape, [...] or create derivative works based on the Site or the Site Content."

The discussion revealed a fundamental tension between public accessibility and content ownership. While some argued that posting on public forums implicitly consents to various forms of consumption, others maintained that there's a meaningful distinction between human reading and algorithmic processing, especially when that processing might eventually feed into commercial AI training datasets.

The Future of LLM Tools

Despite the controversy, many users expressed interest in the technology's potential. Requests for expanded functionality included summarizing favorite topics on HN, tracking discussions over time, and integrating with other protocols such as MCP (Model Context Protocol). Willison said his next major LLM feature will be tool support, and that he plans to build an MCP plugin on top of it.

The discussion also touched on the rapidly improving quality of local models. Willison noted that local models were mostly unusably weak until about six months ago but have since become much more capable, with models such as Qwen 2.5 Coder, Llama 3.3 70B, Mistral Small 3, and Gemma 3 now performing impressively on consumer hardware with enough RAM.
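
As a rough guide to what "enough RAM" means, the memory needed just to hold a model's weights is about the parameter count times the bits per weight, divided by 8. The sketch below applies that arithmetic to a 70-billion-parameter model such as Llama 3.3 70B. The 16-, 8-, and 4-bit precisions are illustrative assumptions rather than figures from the discussion, and real memory use is higher once the context cache and runtime overhead are included.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of a model's weights in gigabytes (10**9 bytes).

    Counts the weights only; the context (KV) cache, activations and runtime
    overhead all add to this, so treat the result as a lower bound.
    """
    # params * bits / 8 gives bytes; the 10**9 in "billion" and in "GB" cancel.
    return params_billion * bits_per_weight / 8


for bits in (16, 8, 4):
    print(f"70B parameters at {bits}-bit: ~{weight_memory_gb(70, bits):.0f} GB")
```

By this estimate a 70B model needs roughly 140 GB at 16-bit precision but about 35 GB when quantized to 4 bits, which is why quantization is what brings such models within reach of well-equipped consumer machines.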

The llm-hacker-news plugin represents both the exciting technical possibilities and the complex ethical questions that arise as AI tools become more integrated into our online experiences. As these technologies continue to evolve, the community will need to navigate the balance between innovation and respecting user agency and content rights.

Reference: llm-hacker-news